This article is built from a presentation originally created by my good friend Romildo Cumbe to demystify containers. I couldn’t resist the creativity and the beautiful sequence the man created. Let’s see if I can successfully leverage it to clearly and smoothly explain to you what containers are, why they were invented, how they were invented and what exactly enabled their creation. This is NOT an article at all. It’s more of a story, a journey of 5 Chapters, shaped to make the complexity of containers easier and more fun to assimilate. Think of the kind of articles Dan Brown would write and then lower your expectations a bit. If you find this painful to read to the end, then I have failed. Grab some popcorn, ’cause this is a huge TL;DR, but hopefully an exciting one.

Chapter 1 – Genesis and the crave for isolation

In the beginning God made the Developer, and then the Developer made applications, and then he deployed those applications to a computer that God made for him. A computer that wouldn’t turn off: it was known as a Server.

But an issue arose when the Developer was asked to build more applications. The Developer got stressed, because all those applications would run on that same machine, and that caused two major problems:

- Dependency – if he installed something that required a restart, all services had to be shut down. And sometimes he couldn’t update a piece of software running on the server, just because one of the applications running on it required a particular version. Running different versions of the same software on one server wasn’t impossible, but it created another problem:

- Mess – I’m guessing I don’t have to elaborate on this one.

But you can clearly see that the Developer craved isolation: something that would allow him to keep his applications apart. A solution came up quickly:

God then gave him multiple computers. That way he could deploy some apps to Server A, others to Server B, and so forth.

Better Organization & Independence were achieved. That solved his problem. So he was happy.

Chapter 2 – The Ghost Machines

Years passed and the Developer was thriving with his one-server-per-app methodology, but sadly, it didn’t last very long:

You can pretty much get an idea of what happened. Toooooooooo many servers. Not a healthy life. The solution he was given created other problems for him. He couldn’t stand that huge room full of computers anymore, with cables breaking and power oscillating all the time. He wanted a better life. And honestly, his boss was also not happy with how much money he was now spending on electricity and rent. Nobody was happy anymore.

God said to the Developer: Look, let’s get back to the old model, in which you only have one machine. But inside that one machine you will have multiple other machines: Ghost Machines.

Machines that don’t really exist, but will seem to exist for you, as they are some kind of programs inside the actual machine.

That statement broke the Developer’s brain. He couldn’t understand it, so he asked for an explanation, and God explained as follows:

Operating systems and Hardware

A computer is pretty much made useful by an Operating System. The Operating System installed on the computer talks to the hardware, calling the shots. It tells your monitor complicated things, like where to paint and what to paint. It tells your disk how far to spin so it can read a specific position. Also, for the record, an operating system has a component called the kernel, which is the central nervous system on which everything depends.

What we are going to do is create a piece of software that emulates hardware: a hypervisor. This hypervisor will pretend to be real hardware while being just software running on top of real hardware. The operating system won’t even notice that the hardware is fake, so we will be able to install and use it on top of the fake hardware provided by the hypervisor.

The hypervisor will be able to emulate multiple machines on top of the same hardware, which will make it easier for you, since you will have multiple machines in one single physical machine. We just need one big and fat physical machine.

The Developer was blown away. He was impressed with God’s idea: Ghost Machines, a.k.a. Virtual Machines. They proceeded with the Ghost Machines. The Developer was happy again.

Chapter 3 – Torvalds’s Illusions & Solomon Hykes

A Lord of a distant realm known as Linus Torvalds had made a breakthrough. He invented a masterpiece. He named it Linux. It was a new Operating System.

That OS became very popular and got very good reviews. It did things other Operating Systems such as Microsoft Windows could not, and those things consisted of a bunch of illusions that could be used to lie to processes about their surroundings: the filesystem, the network, the available devices, etc. These illusions were present in the operating system for years and hadn’t been explored, until...

A young man named Solomon Hykes, from an even further away land, leveraged those illusions to create a tool that would later be known as Docker, the container engine that took containers mainstream.

But what exactly are these so called “illusions”?

The Linux containers building blocks

Containers are pretty much made of the following building blocks:

- chroot – an operation that changes the apparent root directory for the current running process and its children.

- namespaces – a feature of the Linux kernel that partitions kernel resources such that one set of processes sees one set of resources while another set of processes sees a different set of resources.

- cgroups – a Linux kernel feature that limits and isolates the resource usage (CPU, memory, disk I/O, network, etc.) of a collection of processes.
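
Since cgroups don’t get their own section in this story, here is a minimal Python sketch of the idea, assuming the cgroup v2 layout, where a group is just a directory and limits are plain files (`memory.max`, `cpu.max`, `cgroup.procs`). The function name `limit_process` and the group name `demo` are made up for illustration. On a real machine this needs root, and the controllers must be enabled for the parent group.

```python
from pathlib import Path

# Assumption: cgroup v2 is mounted at its usual location.
CGROUP_ROOT = Path("/sys/fs/cgroup")


def limit_process(name, pid, memory_bytes, cpu_percent, root=CGROUP_ROOT):
    """Create the cgroup `name` under `root` and move `pid` into it."""
    group = Path(root) / name
    # On a real cgroup filesystem, creating the directory creates the group;
    # the kernel then exposes its control files inside it.
    group.mkdir(parents=True, exist_ok=True)

    # memory.max holds the hard memory limit, in bytes.
    (group / "memory.max").write_text(f"{memory_bytes}\n")

    # cpu.max holds "<quota> <period>" in microseconds:
    # "50000 100000" means at most 50% of one CPU.
    period_us = 100_000
    quota_us = period_us * cpu_percent // 100
    (group / "cpu.max").write_text(f"{quota_us} {period_us}\n")

    # Writing a PID to cgroup.procs moves that process into the group.
    (group / "cgroup.procs").write_text(f"{pid}\n")
    return group
```

Calling `limit_process("demo", some_pid, 64 * 1024 * 1024, 50)` as root would cap that process at 64 MiB of memory and half a CPU. The `root` parameter exists only so the sketch can be pointed at a scratch directory for experimenting.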

Namespaces and chroot are the most important and brilliant building blocks.

Chroot

With chroot, it is possible to pretty much pack a process with its own mini operating system. The mini operating system will have its own filesystem tree, meaning that when a process inside a chroot tries to access a file, it can only see the files packed in that chroot. The chroot has everything the process needs, all dependencies. If you think about it, most things in Linux are manipulated as files, even system-wide environment variables. Remember the /etc/environment file? Yah, if we are creating an entire filesystem tree, we can just add that file to our tree and give it the values we wish to set as environment variables. Those values will then apply to whatever reads that file inside our chroot (a login session, for example). We pretty much have an isolated filesystem.

Even though we already have an isolated filesystem, a process needs more than that. It needs networking. We need isolation on that level too. That’s exactly where Namespaces come in.
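
To make the filesystem illusion concrete, here is a rough Python sketch. The helper names `build_rootfs` and `run_in_chroot` are invented for this example, and the tree it builds is deliberately tiny: a real one would also need the program itself and its libraries copied in. `os.chroot` is the actual system call wrapper and only works as root.

```python
import os
from pathlib import Path


def build_rootfs(root, env):
    """Lay out a tiny filesystem tree that will become "/" for a process."""
    root = Path(root)
    for directory in ("bin", "etc", "tmp"):
        (root / directory).mkdir(parents=True, exist_ok=True)
    # Our own /etc/environment, holding the values we choose.
    lines = [f"{key}={value}" for key, value in env.items()]
    (root / "etc" / "environment").write_text("\n".join(lines) + "\n")
    return root


def run_in_chroot(root, argv):
    """Run argv with `root` as its apparent "/". Needs root privileges."""
    pid = os.fork()
    if pid == 0:
        try:
            # Child: swap the root, then move into it so the working
            # directory does not keep pointing at the old tree.
            os.chroot(root)
            os.chdir("/")
            os.execv(argv[0], argv)  # argv[0] is looked up inside the new root
        finally:
            os._exit(127)  # only reached if one of the calls above failed
    _, status = os.waitpid(pid, 0)
    return os.waitstatus_to_exitcode(status)
```

Assuming a statically linked shell had been copied to `bin/sh` inside the tree (this sketch does not do that), `run_in_chroot(root, ["/bin/sh"])` would drop you into a shell whose whole world is that directory.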

Namespaces

With Namespaces, it’s possible to create different network configurations for different processes, amongst other things. By network configurations I mean network interfaces with their own settings (IP address, gateway, mask, MTU, etc.). As long as a process is attached to a network namespace, it can only see and use what that namespace defines. Also, any child process it creates is automatically attached to the same namespace. As you can already guess, running the ifconfig command attached to different namespaces will produce different outputs. This is pretty much the networking isolation we wanted. There are other types of namespaces, but we will stop here.
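
You can actually peek at this from Python. The sketch below assumes a Linux machine, where the kernel exposes each process’s namespaces as links under /proc/<pid>/ns/. The helper names are mine. `enter_new_network_namespace` additionally assumes Python 3.12 or newer (for `os.unshare`) and root privileges.

```python
import os


def namespace_id(kind="net", pid="self"):
    """Return the kernel's label for a process's namespace.

    The label is a string of the form "net:[<inode number>]"; two processes
    share a namespace exactly when their labels are equal.
    """
    return os.readlink(f"/proc/{pid}/ns/{kind}")


def same_namespace(pid_a, pid_b, kind="net"):
    """True if both processes live in the same namespace of this kind."""
    return namespace_id(kind, pid_a) == namespace_id(kind, pid_b)


def enter_new_network_namespace():
    """Detach the calling process into a brand-new network namespace.

    Assumptions: Python 3.12+ and root (or CAP_SYS_ADMIN).
    Returns the namespace labels before and after the switch.
    """
    before = namespace_id("net")
    os.unshare(os.CLONE_NEWNET)
    after = namespace_id("net")
    return before, after
```

After `enter_new_network_namespace()`, the two labels differ, and any network tool started from that process sees the new, nearly empty network instead of the host’s.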

A combination of these building blocks is what gives us containers.
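
As a toy illustration of that combination, the sketch below glues the pieces together using two standard Linux commands, `unshare` (from util-linux) and `chroot` (from coreutils). This is not how Docker does it internally, cgroups are left out, and both the function names and the `/srv/rootfs` path are hypothetical.

```python
import subprocess


def container_command(rootfs, argv):
    """Build a command line that runs argv in fresh namespaces and a chroot."""
    return [
        "unshare",
        "--net",   # new network namespace
        "--pid",   # new PID namespace
        "--fork",  # fork, so the program becomes PID 1 of the new PID namespace
        "chroot", str(rootfs),  # then swap the root directory
        *argv,
    ]


def run_container(rootfs, argv):
    """Launch it for real. Needs root and a populated rootfs."""
    return subprocess.run(container_command(rootfs, argv)).returncode
```

`container_command("/srv/rootfs", ["/bin/sh"])` gives `unshare --net --pid --fork chroot /srv/rootfs /bin/sh`: one process, lied to about its network, its neighbours and its filesystem.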

This breakthrough was stunning and it moved a lot of people. The Developer noticed it, and he wanted in. But THE question he asked was: how was Solomon Hykes’s creation better than the Ghost Machines he was already using? Turns out someone could explain it:

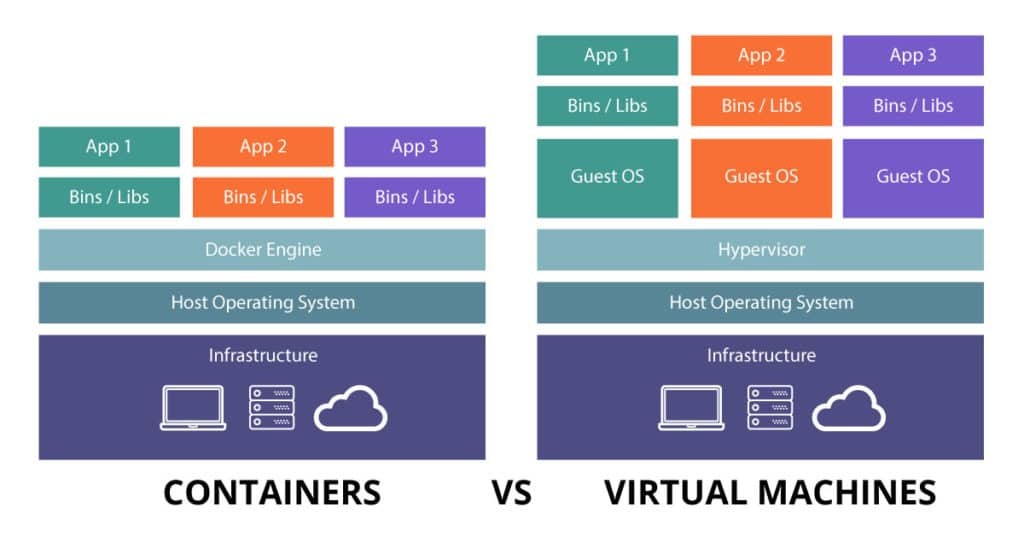

Containers vs Ghost Machines

Ghost Machines are bound to a Hypervisor. The way it works is: we install the hypervisor on a Host machine, we create Ghost Machines in that hypervisor, and then we use those Ghost Machines the same way we would use real, physical machines. But those are full-blown machines, with their own operating system and processes. So if we want to fully isolate two small applications, we need two Ghost Machines. Can you imagine how much we would be spending on disk, CPU and RAM just to run these two apps in isolation? It’s a waste. Containers are much cheaper, ’cause we don’t spin up full-blown machines. We spin up processes. Containers use the Host machine’s kernel and pack only what they need. This makes containers lighter than Ghost Machines on disk, RAM and CPU alike, ’cause none of them has to carry a full operating system of its own.

Let me put things in perspective for you to understand better:

Wow! Wait a minute! Docker Engine? What is that?

Docker – beyond a container engine

Well, remember when I said that Linux had those illusions for years and they hadn’t been explored? Yah, that’s the thing. Developers don’t want to be creating namespaces and chroots just to have an app running. They’d rather deal with the stress of using VMs. That’s where Docker comes in. When people use Docker, they have no idea that there are namespaces being created behind the scenes. Docker created a package format that allows developers to seamlessly create a chroot package, easily execute that package, bind it to networks, attach storage and do all sorts of things namespaces allow. Everything I just said is what container engines do. So you can assume that Docker Engine can be replaced by any other container engine. But that is only true to some extent.

Docker doesn’t stop there, where most engines do. Solomon Hykes is too smart to just stop there. Hykes was a developer and he knew that not all developers work on Linux. He knew that some use Windows and others use Mac (I’m one of those). He wanted all of us to run containers on our dev machines, regardless of our OS. So Docker went to the trouble of building a lightweight hypervisor (HyperKit) that it uses on Mac to spin up lightweight Linux VMs behind the scenes, while on Windows it relies on Microsoft’s own virtualization (Hyper-V, and later WSL 2) for the same purpose. So with Docker, you get much more than a container engine. You get a seamless developer experience across different operating systems.

Docker is much more than a container engine.

The Developer was pleased and satisfied; he had no option but to adopt it. So he did. But then, when he did, he noticed one advantage he hadn’t foreseen. One that would remove a heavy burden from his shoulders.

Chapter 4 – The Hurdles of shipment

There’s one untold story of the Developer. It hadn’t been easy to move applications from his machine to the server. There were situations in which the application worked perfectly on his machine, but then, when moved to the server, it just wouldn’t work.

The thing is: a program is not one single thing. It has dependencies, like the filesystem, environment variables, application servers, etc.

He had to document all the setup steps in order to replicate them on another computer.

Let’s see the following analogy as an example:

This shop depends on infrastructure: the walls, the environment, the electricity, etc. To move it, you would have to tear it down and rebuild it somewhere else.

Now picture the same shop built inside a shipping container. This way the shop doesn’t RUN only in your country, which in this case is the developer’s laptop.

So containers were pretty much a way for the Developer to pack his apps with everything they needed, so that he could ship them anywhere as a single unit.

Gone were the days of the famous “It works on my machine” problem.

This would have been the end of it. But we are humans, we just can’t get enough, can we?

Chapter 5 – Greedy

Back in the day, the Developer just wanted to pack and run his apps in some efficient and consistent way. Containers kinda gave him that. But as he used containers and started handling hundreds of them, he started having new ambitions:

- What if we group multiple Hosts in some sort of cluster to run containers on a big scale?

- What if the cluster was so fault tolerant that it could only die in some apocalyptic disaster?

- What if containers were automatically started on another host whenever their current host dies or goes offline for maintenance?

- What if we could just scale our app up and down, across different Hosts, by just changing a small config?

- What if we didn’t stop there? What if we even made the scaling automatic?

- What if we did all of this regardless of the container engine being used?

- What if we automatically monitor the health of containers and then kill and start fresh whenever they get unhealthy?

- What if there was one open solution that could do all of this and companies could innovate on top of that?

- What if this solution didn’t require you to own the Hosts, or even have access to them, in order for you to deploy a container to them?

Yah, Kubernetes, Open Container Initiative, that’s how they happened!
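
Several of those what-ifs boil down to one loop: compare what you want with what you have, then fix the difference. Here is a toy Python model of a single pass of such a loop. It is only a sketch of the idea, not Kubernetes code; the `reconcile` function and the dictionary shape used for containers are invented for this example.

```python
def reconcile(desired_replicas, containers, live_hosts):
    """Return the container list after one control-loop pass.

    containers: list of dicts such as {"host": "a", "healthy": True}
    live_hosts: names of the hosts that are currently up
    """
    # Keep only containers that are healthy and sit on a host that is up.
    alive = [c for c in containers
             if c["healthy"] and c["host"] in live_hosts]

    # Scale down: drop the surplus.
    alive = alive[:desired_replicas]

    # Scale up or replace: start fresh containers on the least-loaded host.
    while len(alive) < desired_replicas and live_hosts:
        load = {host: 0 for host in live_hosts}
        for c in alive:
            load[c["host"]] += 1
        # sorted() makes ties resolve alphabetically, so runs are repeatable.
        target = min(sorted(load), key=load.get)
        alive.append({"host": target, "healthy": True})
    return alive
```

Feed it three wanted replicas, one unhealthy container and one container stranded on a dead host, and it hands back three healthy containers spread over the hosts that are still up. Changing `desired_replicas` is the “small config” from the list above.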

Thus far the Developer is satisfied. We know he will still crave change, but for now, for what matters to us, he lived “Happily ever after”.

The end.

Some untold facts

It’s important to clarify a few things that were left to the side as we went through the Journey. I figured it was easier to just not mention them, so that we wouldn’t lose focus.

Hypervisors

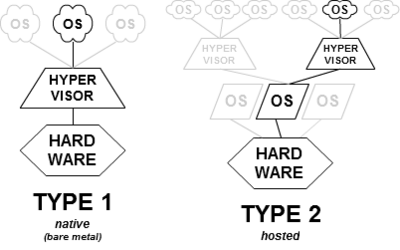

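Not all hypervisors run on top of an operating system. There are two types of hypervisors: Type-1 and Type-2. A Type-1 hypervisor runs directly on the hardware, with no host operating system underneath, while a Type-2 hypervisor runs as a program on top of a regular operating system.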

Windows containers

While containers were born on Linux, Microsoft did tweak Windows to offer primitives that could be used to create containers. Windows containers don’t use Linux VMs; they are lightweight Windows processes that run on top of the Windows kernel, just like Linux containers do on the Linux kernel. Working with Windows containers on Docker is as simple as working with Linux containers. Also, Microsoft introduced something called the Windows Subsystem for Linux (WSL). Its latest version, WSL 2, is pretty much Microsoft shipping a real Linux kernel alongside Windows, inside a lightweight and tightly integrated VM, with full system call compatibility. WSL makes it easier to work with Linux containers on Windows, with much less overhead than a traditional VM.

Other container engines

There are other container engines out there, aside from Docker. Just to name a few:

- LXC

- rkt

- LXD

Virtual machines are not dead

Yah, with all the fancy and exciting things I have said about containers, we still need VMs. Containers don’t run straight on the hardware: they are processes, and processes need an OS, which in practice very often lives inside a VM. VMs can still be useful to run more complex workloads and even to provide isolation between groups of containers.

Enough!

What a TL;DR! Yah, it took me a while to write this. I felt like I had to do this. Believe it or not, it’s much easier to find an article that explains how to use Docker than one that puts all the pieces of the puzzle together to clearly explain what all of that is. I hope I have shown you that it’s not magic and that you now understand what’s going on in there.

Please don’t share or like this if you didn’t enjoy it.
